AI Bots and Compliance: What You Need to Know About Using AI in Customer Communication

AI bots are now a go-to tool for modern customer service, helping teams deliver quick, efficient, and scalable support. Whether it’s answering simple questions or guiding users through products, these AI tools and agents can speed up responses, boost customer satisfaction, and give your human agents more time to focus on the tough stuff.

But with all these benefits come some important responsibilities. When bots chat directly with customers, they’re not just making things easier; they’re also handling personal data and sometimes making decisions. That’s why these interactions fall under data protection laws, consumer rights rules, and the growing list of AI regulations.

Staying legally compliant is key to:

- Earning and keeping customers’ trust, especially when bots are used in sensitive or high-impact situations,

- Handling personal data the right way, following rules like CCPA, GDPR, and others,

- Avoiding trouble, like fines or complaints from users if an AI bot gives wrong or biased answers,

- Keeping your business running smoothly, since non-compliance can mean losing access to certain markets or partnerships, especially in places like the EU.

As AI rules keep changing, it’s more important than ever to stay on top of how AI tools connect with legal responsibilities. In this article, we’ll walk you through the key things to know so you can use AI bots with confidence, and stay on the right side of the law.

1. What makes an AI bot "compliant"? Key considerations

When you use AI bots to talk to customers, you’re stepping into a space that overlaps with a few key legal areas like privacy, data use, and being transparent with users. Which rules apply can depend on where your business and your users are based, and exactly how your bot is set up to work.

Here’s an overview of the most relevant laws and how they connect to key AI bot features:

CCPA/CPRA (California Consumer Privacy Act, as amended by the California Privacy Rights Act)

Who it applies to: Businesses handling personal information of California residents.

Why it matters for AI bots:

- Requires a clear notice at collection, so you need to let users know what data you’re collecting and how it will be used.

- People must be able to say “no thanks” to data sharing, so you have to provide a way to opt out, as well as a way for users to access or delete their data.

- If your bot is using any kind of automated decision-making or profiling, you’ve got to be clear about that too.

FTC Guidance (U.S. Federal Trade Commission)

Who it applies to: All commercial activity in the U.S., especially consumer-facing AI tools.

Why it matters for AI bots:

- The FTC expects truthful, fair, and transparent AI use.

- If users think they’re chatting with a real person when they’re not, that could count as misleading or deceptive.

- It’s also important to make sure your AI bots don’t end up spreading bias or discrimination.

GDPR (General Data Protection Regulation)

Who it applies to: Any organization that handles personal data from people in the EU or EEA.

Why it matters for AI bots:

- AI bots often collect personal data (e.g., names, emails).

- You need to make sure this data is handled legally - usually by getting consent or having a legitimate interest in using it.

- Users have the right to be informed about how their data is processed, including any automated decision-making, and to request access, correction, or deletion of their data.

- Automated decision-making may trigger special protections if it significantly affects users.

EU AI Act

Who it applies to: Providers and deployers (the businesses that put AI systems to use) of AI systems in the EU.

Why it matters for AI bots:

- The law sorts AI tools by how risky they are - customer service bots usually fall into the “limited-risk” category but still have to be transparent.

- You must inform users they are interacting with AI.

- In some cases, especially if there’s a lot of automation or personalization involved, you might have to share info about how the AI was trained, tested, and set up.

2. Transparency obligations: Do you have to tell users they're talking to a bot?

One of the biggest, and easiest to overlook, parts of staying compliant is simply being upfront with users. If someone is chatting with an AI tool instead of a human, they have a right to know. Transparency isn’t just good practice - in many places, it’s the law.

Under many regulations (e.g., CCPA/CPRA, EU AI Act), you need to be transparent about using automated tools like AI bots. That means letting users know from the start that they’re chatting with a bot. The FTC has also warned businesses that failing to disclose when customers are speaking to a bot could count as misleading or deceptive. While not specific to bots, GDPR supports the right to be informed about automated decision-making.

Safe bot behavior examples

- The bot clearly identifies itself at the start of the conversation. E.g., “Hi, I’m your AI assistant.”

- Users are not misled into thinking they’re talking to a human. E.g., avoid giving bots real human names/photos without clarifying their nature.

Risky bot behavior examples

- Using a human name, tone, or image without disclosing the bot’s nature. E.g., “Hi, I’m Emma! How can I help you?” with no mention that Emma is an AI.

- Designing conversations to make the AI indistinguishable from a human.

- Making automated decisions (e.g., rejecting a refund) without clear human oversight or explanation.
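To make the difference between the safe and risky greetings above concrete, here is a minimal sketch of a check you could run on a welcome message before publishing it. It is not part of Text’s platform: the function name `discloses_ai` and the phrase list `DISCLOSURE_PATTERNS` are hypothetical, and a keyword check like this is only a rough first filter, not proof of compliance.

```python
import re

# Hypothetical phrases that we treat as an AI disclosure.
# This list is an assumption; adapt it to your own policy and languages.
DISCLOSURE_PATTERNS = [
    r"\bAI\b",
    r"\bbot\b",
    r"\bvirtual assistant\b",
    r"\bautomated\b",
]


def discloses_ai(message: str) -> bool:
    """Return True if the message contains at least one disclosure phrase."""
    return any(
        re.search(pattern, message, flags=re.IGNORECASE)
        for pattern in DISCLOSURE_PATTERNS
    )


safe_greeting = "Hi, I'm your AI assistant. How can I help?"
risky_greeting = "Hi, I'm Emma! How can I help you?"

print(discloses_ai(safe_greeting))   # the greeting mentions "AI"
print(discloses_ai(risky_greeting))  # no disclosure phrase present
```

A check like this can flag a greeting such as the “Emma” example for a second look, but a person should still decide whether the wording is clear enough for your users.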

What Text recommends and provides

At Text we believe that clear, honest communication builds trust. That’s why our platform includes built-in tools to help customers meet transparency standards:

- Add a welcome message that clearly introduces the AI bot.

- Use visual labels (e.g., "AI Assistant" badge or icon) to indicate that the response is generated by automation.

- Easily route users to a human agent when needed.

Now that you know all of that, let’s explore the legal implications of AI-generated responses.

3. AI-generated responses: risks and responsibilities

AI bots are powerful tools, but they’re not perfect. When an automated system generates messages or advice on your behalf, you have to understand the risks and responsibilities that come with it.

In this section, we’ll cover what you need to know when relying on AI-generated responses - including who’s responsible if something goes wrong, how content ownership works, and why it’s still important to keep a human in the loop.

Who’s responsible if the bot gets it wrong?

While the bot provider (such as Text) provides the underlying AI capabilities, you, the customer, are ultimately responsible for how the bot is configured and used.

Risks may include:

- Misleading or inaccurate advice (e.g., telling people their refund is approved when it’s not).

- Inappropriate or biased responses, especially if the AI has been fine-tuned on problematic data.

- Unauthorized financial, medical, legal, or similar advice, which could breach laws or professional boundaries.

We suggest always making sure the content generated by your AI bot is guided or constrained by predefined workflows, or checked through human review.
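One way to picture this kind of constraint is a simple gate that holds back drafts touching sensitive topics until a person has reviewed them. The sketch below is illustrative only: `SENSITIVE_TOPICS`, `Decision`, and `review_gate` are hypothetical names, and the keyword lists are assumptions rather than a recommended taxonomy.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical keyword lists per sensitive topic (assumptions for illustration).
SENSITIVE_TOPICS = {
    "refund": ("refund", "chargeback"),
    "medical": ("diagnosis", "medication", "symptom"),
    "legal": ("lawsuit", "contract", "liability"),
    "financial": ("invest", "loan", "tax"),
}


@dataclass
class Decision:
    action: str            # "send" or "human_review"
    topic: Optional[str]   # which topic triggered the review, if any


def review_gate(draft: str) -> Decision:
    """Hold a drafted bot reply for human review if it touches a sensitive topic."""
    lowered = draft.lower()
    for topic, keywords in SENSITIVE_TOPICS.items():
        if any(keyword in lowered for keyword in keywords):
            return Decision(action="human_review", topic=topic)
    return Decision(action="send", topic=None)


print(review_gate("Your refund is approved."))
print(review_gate("Our store opens at 9 am."))
```

Substring matching is deliberately blunt here: it will over-flag some harmless replies, which is usually the safer failure mode when the alternative is an unreviewed refund or medical statement.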

Who owns the content the bot produces?

Ownership of AI-generated responses can be a gray area. Here's how it typically works.

Customers retain rights to content generated through their use of Text’s platform, assuming they provide the input. If third-party models (like OpenAI or other LLMs) are involved, their terms may apply. Most allow commercial use, but may limit rights around sensitive, trademarked, or copyrighted content. When generating AI content, it’s worth keeping in mind that purely AI-generated output may not be copyrightable in some regions (e.g., the U.S.) unless there’s a meaningful level of human authorship.

The importance of human-in-the-loop

Having a human-in-the-loop is a key principle of safe and compliant AI usage, because even the best AI bots can make mistakes.

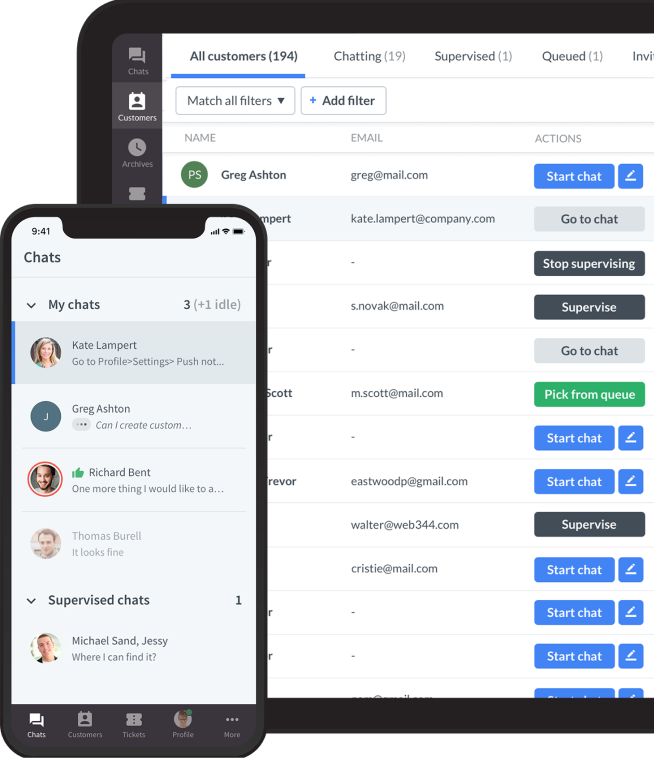

Text supports this approach by offering:

- Live agent fallback options when the bot can’t handle a request

- Manual approval flows for messages

You have full control over how your AI bot works and responds. AI responses are generated only when there is no message predefined by the AI bot account manager.
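The order of precedence described here (predefined message first, AI-generated reply second, live agent as the fallback) can be sketched as follows. This is a simplified illustration under our own assumptions, not Text’s actual implementation: `PREDEFINED_REPLIES`, `HANDOFF_TEXT`, `respond`, and the `generate` callback are all hypothetical.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical predefined replies keyed by intent name.
PREDEFINED_REPLIES: Dict[str, str] = {
    "opening_hours": "We are open 9:00-17:00, Monday to Friday.",
}

HANDOFF_TEXT = "Let me connect you with a human agent."


def respond(
    intent: str,
    question: str,
    generate: Callable[[str], Optional[str]],
) -> Tuple[str, str]:
    """Return (source, text), preferring predefined replies over AI output."""
    # 1. A predefined message always wins.
    if intent in PREDEFINED_REPLIES:
        return ("predefined", PREDEFINED_REPLIES[intent])
    # 2. Otherwise ask the AI model (passed in as a callback).
    answer = generate(question)
    # 3. If the model has nothing usable, hand off to a live agent.
    if not answer:
        return ("human", HANDOFF_TEXT)
    return ("ai", answer)


# Stand-in for a real model call: it returns None when it cannot answer.
def fake_model(question: str) -> Optional[str]:
    return "You can track your order in your account." if "order" in question else None


print(respond("opening_hours", "When are you open?", fake_model))
print(respond("unknown", "Where is my order?", fake_model))
print(respond("unknown", "Can you waive my fee?", fake_model))
```

Returning the source alongside the text makes it easy to log which replies were AI-generated, which helps when you need to audit a conversation later.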

Let’s now check how Text helps you reduce risks, build trust, and maintain control over AI-powered communication.

4. How Text helps you stay compliant

Our platform is built on compliance-by-design principles and comes with features that support you in meeting key requirements.

Key features we offer:

- Our AI bot lets you automatically track bot interactions. This may be useful for responding to users’ requests or audits. The debug mode view gives you a more detailed picture: you can see exactly which actions, blocks, and steps the bot triggered to send a message to your users.

- Configure your AI bot to display messages, like “I am an AI bot,” tailored by language or use case.

- You can offer users choices to opt out of bot interactions or profiling.

- Set how long bot conversations are stored and ensure easy deletion when needed, supporting right-to-be-forgotten requests.
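To show what a retention period and a deletion request amount to in practice, here is a minimal sketch that works on an in-memory list of conversations. The record fields (`user_id`, `ended_at`) and the function names `purge_expired` and `delete_user_data` are hypothetical and chosen for illustration; they do not describe Text’s storage or settings.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

Conversation = Dict[str, object]  # hypothetical record: {"user_id": str, "ended_at": datetime}


def purge_expired(
    conversations: List[Conversation],
    retention_days: int,
    now: Optional[datetime] = None,
) -> List[Conversation]:
    """Keep only conversations that ended within the retention period."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [c for c in conversations if c["ended_at"] >= cutoff]


def delete_user_data(
    conversations: List[Conversation], user_id: str
) -> List[Conversation]:
    """Remove every conversation belonging to one user (a deletion request)."""
    return [c for c in conversations if c["user_id"] != user_id]


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
store = [
    {"user_id": "a", "ended_at": datetime(2025, 1, 30, tzinfo=timezone.utc)},
    {"user_id": "b", "ended_at": datetime(2024, 12, 1, tzinfo=timezone.utc)},
]

kept = purge_expired(store, retention_days=30, now=now)
print([c["user_id"] for c in kept])                            # only recent conversations remain
print([c["user_id"] for c in delete_user_data(store, "a")])    # user "a" removed on request
```

In a real deployment, the same two rules would also need to cover backups, logs, and any copies held by third-party model providers.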

Text partners only with trusted AI model providers and fine-tunes its systems to make sure they meet high standards for fairness, security, and performance. We regularly test our AI to catch things like bias, inaccurate answers, or harmful content, and we keep our tools updated to reflect the latest legal rules, ethical guidelines, and product improvements.

5. Text’s commitment to continued responsible AI usage

At Text, we believe that compliance is an ongoing process. That’s why we are committed to:

- Monitoring legal developments in AI and data privacy worldwide.

- Updating our platform features to meet new compliance obligations as they emerge.

- Providing educational resources (like this article) to help customers navigate changes.

- Partnering with customers to ensure their AI deployments remain safe, ethical, and user-friendly.

We’re keeping our fingers crossed for your smooth compliance with AI standards, and we hope this article gives you some helpful clarity along the way.

If you have questions about AI compliance or how to configure your bot, feel free to reach out to our Support Team via LiveChat or email support@text.com.

You can also check out more resources in the Text Help Center, like our AI Trust Policy and Text’s AI Code of Conduct.

Disclaimer: This article is for general informational purposes only and does not constitute legal advice. For specific legal guidance, please consult with a qualified attorney or your organization’s legal team.